Hey NHibernate, Don’t Mess With My Enums!

Posted: April 16, 2011 Filed under: C#, DataBase, Mono.NET | Tags: .NET, C#, DataBase, Enum, Fluent NHibernate, Mono, NHibernate, ORM

So I’ve been using Fluent NHibernate for a short while now. Initially I had to overcome some minor challenges, but since I got those out of the way it’s been pretty smooth sailing. One thing that stands out, and that required more tinkering and time than I would’ve liked, is the way NHibernate handles the .NET enum type. Natively, NHibernate allows you to save your enum’s value as a string or number property/column in the referencing object’s table. In other words, by default it doesn’t allow you to map your enum to its own separate table and then let your objects refer to it through an association/foreign key. To NHibernate, enums are primitive values, not “entity objects” (logically speaking, ignoring the technical internal mechanics of .NET’s enum). I would argue that an enum can be either a primitive string or number, or a more complex entity. Under certain circumstances an enum can be viewed as a simple “object” that consists of two properties:

- An Id, represented by the enum member’s number value

- And a name, represented by the enum member’s string name.

I’ve found that it’s very convenient to use the “entity object” version of enums for very simple, slow-changing look-up data with a fair amount of business logic attached to it. For instance, in a credit application you might only support 3 or 4 types of loans, and you know that over the app’s lifetime the company won’t add more than 2 or 3 new types of loans. Adding a loan type requires some additional work; it isn’t merely a matter of inserting a new loan type into a look-up table, because a fair amount of the app’s business logic, mainly in the form of conditional statements, must also be adapted to accommodate the new loan type. From a coding perspective it’s very convenient to use enum types in these cases, because you can refer to the various options through DRY, strongly typed members that have both a string and a number representation. So instead of

var loan = loanRepository.FindById(234);

var loanType = loanTypeRepository.FindById(123);

// ...

if (loan.Type == "PersonalLoan")

{

// ...

}

rather do

var loan = loanRepository.FindById(234);

if (loan.Type == LoanType.Personal)

{

//...

}

Okay, schweet, you get the point. Next logical question: How do you get NHibernate to treat your enums as objects with their own table, and not primitive values? To do this you have to create a generic class that can wrap your enum types, and then create a mapping for this enum wrapper class. I call this class Reference:

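using System;

// Wraps an enum member so NHibernate can map it as an entity with its own table.
public class Reference<TEnum>
{
    private TEnum enm;

    public Reference(TEnum enm)
    {
        this.enm = enm;
    }

    // Parameterless constructor, needed by NHibernate to materialize instances.
    public Reference() {}

    // The enum member's integer value, used as the primary key.
    public virtual int Id
    {
        get { return Convert.ToInt32(enm); }
        set { enm = (TEnum)Enum.ToObject(typeof(TEnum), value); }
    }

    // The enum member's name, e.g. "Personal"; parsing ignores case.
    public virtual string Name
    {
        get { return enm.ToString(); }
        set { enm = (TEnum)Enum.Parse(typeof(TEnum), value, true); }
    }

    // The wrapped enum member itself.
    public virtual TEnum Value
    {
        get { return enm; }
        set { enm = value; }
    }
}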

The Reference class is pretty straightforward. All it does is expose the contained enum as an object with three properties:

- Id – the integer value of the enum member.

- Name – the string name of the enum member.

- Value – the contained enum member.

You might wonder why I didn’t bother to restrict the allowed generic types to enums. Well, it so happens that C# doesn’t allow you to constrain a generic type parameter to enums. It allows you to restrict generic types to structs, and all sorts of other things, but not to enums. So you can’t get an exact generic restriction for the Reference class. So I thought, aag what the hell, if I can’t get an exact restriction, then what’s the point anyways? I’ll have to trust that whoever is using the code knows what he’s doing.
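For readers on a newer compiler: C# 7.3 and later accept `System.Enum` as a generic constraint, so the restriction described above has since become possible. The sketch below is a variant of the Reference class (not the original code) that uses `where TEnum : struct, Enum`; the LoanType members are hypothetical. On older compilers, a runtime check such as `typeof(TEnum).IsEnum` in a static constructor is the closest equivalent.

```csharp
using System;

// Hypothetical enum members, for illustration only.
public enum LoanType
{
    Personal = 1,
    Home = 2,
    Vehicle = 3
}

// Variant of Reference<TEnum>; the System.Enum constraint requires C# 7.3 or later.
public class Reference<TEnum> where TEnum : struct, Enum
{
    private TEnum enm;

    public Reference() { }

    public Reference(TEnum enm)
    {
        this.enm = enm;
    }

    // Integer value of the enum member, used as the primary key.
    public virtual int Id
    {
        get { return Convert.ToInt32(enm); }
        set { enm = (TEnum)Enum.ToObject(typeof(TEnum), value); }
    }

    // Name of the enum member; parsing ignores case.
    public virtual string Name
    {
        get { return enm.ToString(); }
        set { enm = (TEnum)Enum.Parse(typeof(TEnum), value, true); }
    }

    // The wrapped enum member.
    public virtual TEnum Value
    {
        get { return enm; }
        set { enm = value; }
    }
}
```

With the constraint in place, `new Reference<int>()` no longer compiles, while the Id and Name setters keep the round-trip behavior of the original class.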

Now, for example, instead of directly using the LoanType enum, the Loan class’s Type property will be a Reference object, with its generic type set to the LoanType enum:

public class Loan

{

// ...

public virtual Reference<LoanType> Type { get; set; } // virtual, so NHibernate can generate lazy-loading proxies

// ...

}

This is not completely tidy, because to a degree the limitations of the data access infrastructure, i.e. NHibernate, force us to adopt a compromise that wouldn’t be necessary if we used something else. In other words, concerns from the data infrastructure layer spill into the domain.

What’s left to do is (1) create a mapping for Reference<LoanType>, and (2) get NHibernate to use the right table name, i.e. LoanType, instead of Reference[LoanType]. Here is the Fluent NHibernate mapping for Reference<LoanType>:

public class LoanTypeMap: ClassMap<Reference<LoanType>>

{

public LoanTypeMap()

{

Table(typeof(LoanType).Name);

Id(loanType => loanType.Id).GeneratedBy.Assigned();

Map(loanType => loanType.Name);

}

}

The above Fluent NHibernate mapping tells NHibernate to use the value of the Id property as the primary key, instead of generating one. You also have to explicitly specify the table name you’d like NHibernate to use, because you want to drop “Reference” from the table name and use only the enum type’s name.
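To see why the explicit Table(…) call helps, it is instructive to compare the CLR names of the two types involved. This sketch uses only reflection (no NHibernate) and a hypothetical, empty Reference<TEnum> stand-in; it makes no claim about how NHibernate derives its default table name. It shows that a closed generic type reports an arity-mangled name, while the enum reports a clean one, which makes typeof(LoanType).Name the natural choice for the table.

```csharp
using System;

// Hypothetical enum and an empty stand-in for the generic wrapper.
public enum LoanType { Personal = 1, Home = 2 }

public class Reference<TEnum> { }

public static class TypeNames
{
    // Name of the enum type itself, e.g. "LoanType".
    public static string EnumName<TEnum>()
    {
        return typeof(TEnum).Name;
    }

    // CLR name of the closed generic wrapper: the generic arity is
    // appended after a backtick, e.g. "Reference`1".
    public static string WrapperName<TEnum>()
    {
        return typeof(Reference<TEnum>).Name;
    }
}
```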

And that’s it. You will now have a separate table called LoanType, which the foreign keys in other classes’ tables reference. Just keep in mind that this solution might not always be feasible. For example, it might not work too well when you write a multilingual application. Also, should you want a pretty description for each enum member, for example “Personal Loan” instead of “PersonalLoan”, you’ll have to throw in some intelligent text parsing that splits a string before each uppercase character. Hopefully this post gave you another option for mapping your enum types with NHibernate.
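The text parsing mentioned above can be as small as one regular expression. A minimal sketch, assuming enum member names are PascalCase without acronyms (a name like HTTPLoan would come out as "H T T P Loan"); EnumText and ToDisplayName are hypothetical names:

```csharp
using System;
using System.Text.RegularExpressions;

public static class EnumText
{
    // Inserts a space before every uppercase letter except the first
    // character, e.g. "PersonalLoan" -> "Personal Loan".
    public static string ToDisplayName(string memberName)
    {
        return Regex.Replace(memberName, "(?<!^)([A-Z])", " $1");
    }

    // Convenience overload that works directly on an enum member.
    public static string ToDisplayName(Enum member)
    {
        return ToDisplayName(member.ToString());
    }
}
```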

REST Web Services with ServiceStack

Posted: March 21, 2011 Filed under: C#, InfoTech, Mono.NET | Tags: .NET, Alt.Net, Apache, C#, HTTP, IIS, JavaScript, JSON, mod_mono, Mono, Open Source, REST, ServiceStack, WCF, Web Services

Over the past month I ventured deep into the alternative side of the .NET web world. I took quite a few web frameworks for a test drive, including OpenRasta, Nancy, Kayak and ServiceStack. All of them support Mono, except OpenRasta, which has it on its road map. While kicking the tires of each framework, some harder than others, I saw just how far .NET has grown beyond its Microsoft roots, and how spoiled .NET developers have become with a long list of viable alternative .NET solutions from the valley of open source.

ServiceStack really impressed me with its solid mix of components that speak to the heart of any modern C# web application, from Redis NoSQL and lightweight relational database libraries right through to an extremely simple REST and SOAP web service framework. As the name suggests, it is indeed a complete stack.

Anyways, enough with the marketing fluff, let’s pop the bonnet and get our hands dirty. What I’m going to show you isn’t anything advanced, just a few basic steps to help you get to like the ServiceStack web framework as much as I do. You can learn the same things I’ll explain here by working through the very complete ServiceStack example applications, but I thought some extra tidbits I picked up along the way would make life even easier for you.

Some Background Info On REST

I’m going to show you how to build a REpresentational State Transfer (REST) web service with ServiceStack. RESTful web services expose our domain services and entities as resources, each with its own URI, that are accessed through HTTP methods, or verbs (GET, PUT, POST and DELETE). This is different from SOAP web services, which expose methods RPC-style and ignore the underlying HTTP methods and headers. Implementing a REST resource and its HTTP methods in ServiceStack requires two classes: RestServiceBase and RestServiceAttribute.

Another feature of REST services is that resources are typically encoded as either XML or JSON. The latest trend, however, is to encode objects in JSON for its brevity and smaller size, rather than in its clunkier counterpart, XML. We will therefore follow suit. Okay, I think you’re ready now to write your first line of ServiceStack code.

Create a Web Service Host with AppHostBase

The first thing you have to do is specify how you’d like ServiceStack to run your web services. You can choose to run them either from Internet Information Services (IIS) or Apache, or from the embedded HttpListener-based web server. Both approaches require you to declare an application host class; when hosting in IIS or Apache, it inherits from AppHostBase:

public class AppHost: AppHostBase

{

public AppHost()

: base("Robots Web Service: It's alive!", typeof(RobotRestResource).Assembly) {}

public override void Configure(Container container)

{

SetConfig(new EndpointHostConfig

{

GlobalResponseHeaders =

{

{ "Access-Control-Allow-Origin", "*" },

{ "Access-Control-Allow-Methods", "GET, POST, PUT, DELETE, OPTIONS" },

},

});

}

}

Class AppHost’s default constructor calls AppHostBase’s two-argument constructor. The first argument is the name of the web app, and the second tells ServiceStack to scan the assembly where class RobotRestResource is defined for REST web services and resources.
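Scanning an assembly for resources boils down to reflection over its types. The following is a simplified illustration of the idea, not ServiceStack’s actual implementation; ResourcePathAttribute, RobotResource, PlainDto and ResourceScanner are hypothetical names:

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical stand-in for ServiceStack's RestServiceAttribute.
[AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
public sealed class ResourcePathAttribute : Attribute
{
    public ResourcePathAttribute(string path)
    {
        Path = path;
    }

    public string Path { get; private set; }
}

[ResourcePath("/robot")]
public class RobotResource { }

// Not decorated, so the scanner ignores it.
public class PlainDto { }

public static class ResourceScanner
{
    // Maps each declared path to the type that declared it, for every
    // type in the assembly that carries [ResourcePath].
    public static Dictionary<string, Type> Scan(Assembly assembly)
    {
        var routes = new Dictionary<string, Type>();
        foreach (Type type in assembly.GetTypes())
        {
            foreach (ResourcePathAttribute attribute in
                     type.GetCustomAttributes(typeof(ResourcePathAttribute), false))
            {
                routes[attribute.Path] = type;
            }
        }
        return routes;
    }
}
```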

AppHostBase’s Configure method must be overridden, even if it’s empty, otherwise you’ll get an exception. If you plan on making cross-domain JavaScript calls from your web user interface to your REST resources (i.e. your web interface is written in JavaScript and hosted on a separate web site from your web services), then adding the correct global response headers is very important. Together, the two Access-Control-Allow headers tell browsers that send a preflight OPTIONS request that their cross-domain request will be allowed. I’m not going to explain the internals, but a search for Cross-Origin Resource Sharing (CORS) should yield sufficient info.

Now all that’s left to do is to initialize your custom web service host in Global.asax‘s Application_Start method:

public class Global : System.Web.HttpApplication

{

protected void Application_Start(object sender, EventArgs e)

{

new AppHost().Init();

}

}

The last thing you might be wondering about, before we move on, is the web.config of your ServiceStack web service. For brevity I’m not going to cover it, but please download ServiceStack’s examples and use one of their web.configs. The setup required to run ServiceStack from IIS is really minimal and very easy to configure.

Define REST Resources with RestService

Now that we’ve created a host for our services, we’re ready to create some REST resources. In a very basic sense you could say a REST resource is like a Data Transfer Object (DTO) that provides a suitable external representation of your domain. Let’s create a resource that represents a robot:

using System.Collections.Generic;

using System.Runtime.Serialization;

[RestService("/robot", "GET,POST,PUT,OPTIONS")]

[DataContract]

public class RobotRestResource

{

[DataMember]

public string Name { get; set; }

[DataMember]

public double IntelligenceRating { get; set; }

[DataMember]

public bool IsATerminator { get; set; }

[DataMember]

public IList<string> Predecessors { get; set; }

public IList<Thought> Thoughts { get; set; }

}

The minimum requirement for a class to be recognized as a REST resource by ServiceStack is that it must implement IRestResource and have a RestServiceAttribute with a URL template, and that’s it. ServiceStack doesn’t force you to use the DataContractAttribute or DataMemberAttribute. The only reason I used them in the example is to demonstrate how to exclude a member from being serialized to JSON when the resource is sent to the client: the Thoughts member will not be serialized, and the web client will never see its value. I had a situation where I wanted a member on my resource for internal use in my application, but I didn’t want to send it to clients over the web service. In this situation you have to apply the DataContractAttribute to your resource’s class definition, and the DataMemberAttribute to each property you want to expose. Nothing else is required to declare a REST resource for ServiceStack.
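The opt-in behavior of these attributes is easy to check in isolation. This sketch uses the .NET Framework’s own DataContractJsonSerializer rather than ServiceStack’s serializer, on the assumption (per the text above) that ServiceStack honors the attributes the same way; RobotDto and DtoJson are hypothetical names:

```csharp
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;
using System.Runtime.Serialization.Json;
using System.Text;

[DataContract]
public class RobotDto
{
    [DataMember]
    public string Name { get; set; }

    [DataMember]
    public bool IsATerminator { get; set; }

    // No [DataMember]: with [DataContract] on the class, this property is
    // left out of the serialized output.
    public List<string> Thoughts { get; set; }
}

public static class DtoJson
{
    // Serializes the DTO to a JSON string.
    public static string Serialize(RobotDto robot)
    {
        var serializer = new DataContractJsonSerializer(typeof(RobotDto));
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, robot);
            return Encoding.UTF8.GetString(stream.ToArray());
        }
    }
}
```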

Provide a Service for Each Resource with RestServiceBase

Each resource you declare requires a corresponding service that implements the supported HTTP verb-methods:

public class RobotRestService: RestServiceBase<RobotRestResource>

{

public override object OnPut(RobotRestResource robotRestResource)

{

// Do something here & return a

// new RobotRestResource here,

// or any other serializable

// object, if you like.

// Placeholder so the method compiles: echo the received resource back.

return robotRestResource;

}

public override object OnGet(RobotRestResource robotRestResource)

{

// Do some things here ...

// Return the list of RobotRestResources

// here, or any other serializable

// object, if you like.

return new []

{

new RobotRestResource(),

new RobotRestResource()

};

}

}

In order for ServiceStack to assign a class as a service for a resource, you have to inherit from RestServiceBase, specifying the resource class as the generic type. RestServiceBase provides virtual methods for each REST approved HTTP-verb: OnGet for GET, OnPut for PUT, OnPost for POST and OnDelete for DELETE. You can selectively override each one that your resource supports.

Each HTTP-verb method may return one of the following results:

- Your IRestResource DTO object. This will send the object to the client in the specified format JSON, or XML.

- ServiceStack.Common.Web.HtmlResult, when you want to render the page on the server and send that to the client.

- ServiceStack.Common.Web.HttpResult, when you want to send an HTTP status to the client, for instance to redirect the client:

var httpResult = new HttpResult(new object(), null, HttpStatusCode.Redirect);
httpResult.Headers[HttpHeaders.Location] = "https://openlandscape.wordpress.com";
return httpResult;

And that’s it. Launch your web site and send a GET request, which ServiceStack routes to OnGet, to /robot?format=json, or, if you prefer XML, to /robot?format=xml. To debug your RESTful service API I can highly recommend the Poster Firefox plug-in, which lets you manually construct HTTP requests and send them to the server.

You might be wondering what the purpose is of the RobotRestResource that gets passed to each HTTP-verb method. It is basically an aggregation of the posted form parameters and URL query string parameters. In other words, if the submitted form has a field whose name corresponds to one of RobotRestResource’s properties, ServiceStack will automatically assign the field’s value to that property of the supplied RobotRestResource. The same applies to query strings: given ?Name=TheTerminator&IsATerminator=true, robotRestResource’s Name will be assigned the value “TheTerminator” and IsATerminator will be true.
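The binding rule described above can be sketched with plain reflection. This is a simplified illustration, not ServiceStack’s implementation: it only handles query strings, matches property names case-insensitively, and converts values with Convert.ChangeType (so nullable properties are not supported). RobotQuery and QueryBinder are hypothetical names:

```csharp
using System;
using System.Globalization;
using System.Reflection;

public class RobotQuery
{
    public string Name { get; set; }
    public bool IsATerminator { get; set; }
    public double IntelligenceRating { get; set; }
}

public static class QueryBinder
{
    // Copies each "key=value" pair onto the public property with the same
    // name, converting the value to the property's type.
    public static T Bind<T>(string queryString) where T : new()
    {
        var target = new T();
        var pairs = queryString.TrimStart('?')
            .Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries);

        foreach (var pair in pairs)
        {
            var parts = pair.Split(new[] { '=' }, 2);
            if (parts.Length != 2) continue;

            var key = Uri.UnescapeDataString(parts[0]);
            var value = Uri.UnescapeDataString(parts[1].Replace('+', ' '));

            var property = typeof(T).GetProperty(key,
                BindingFlags.Public | BindingFlags.Instance | BindingFlags.IgnoreCase);
            if (property == null || !property.CanWrite) continue; // unknown keys are ignored

            var converted = Convert.ChangeType(value, property.PropertyType,
                CultureInfo.InvariantCulture);
            property.SetValue(target, converted, null);
        }
        return target;
    }
}
```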

Using ServiceStack’s Built-In Web Server as a Service Host

The above discussion assumed that you’ll be hosting your ServiceStack service in IIS, or in Apache with mod_mono. However, ServiceStack has another pretty cool option available: self-hosting. That’s right, services can be hosted independently and embedded in your application. This might be useful in scenarios where you don’t want to depend on IIS. I imagine something like a Windows service, or similar, that also serves as a small web server to expose a web service API to clients, without the need for lengthy and complicated IIS setup procedures.

var appHost = new AppHost();

appHost.Init();

appHost.Start("http://localhost:82/");

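To start the self-hosted ServiceStack you configure your host as usual, and then call Start(…), passing the URL (with a free port) where the web server will be accessed. Note that Start(…) is provided by AppHostHttpListenerBase rather than AppHostBase, so a self-hosted AppHost should inherit from AppHostHttpListenerBase.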

Why Use ServiceStack

For me one of the big reasons for choosing ServiceStack is that it provides a solid library for building web services that run on Mono. And after using it for a while, I found its easy setup and simple conventions a refreshing change from the often confusing and cumbersome configuration of Windows Communication Foundation (WCF) web services. ServiceStack also does a much better job of RESTful services than WCF’s current implementation. I know future versions of WCF will enable a more mature RESTful architecture, but for now it’s pretty much RPC hacked into REST. Another bonus was the complete set of example apps, which were a great help in quickly getting things working. So if you’re tired of WCF’s heavy configuration and you’re looking for something to quickly implement mature RESTful web services, then definitely give ServiceStack a try.

Fluent NHibernate on PostgreSQL

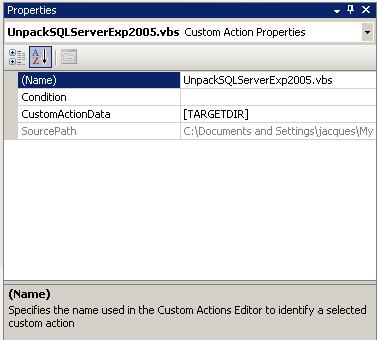

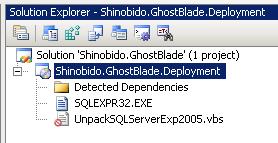

Posted: November 23, 2010 Filed under: C#, DataBase, Mono.NET | Tags: C#, Fluent NHibernate, Mono, NHibernate, Object Relational Mapper, ORM, Postgres, PostgreSQL

When you write your first Fluent NHibernate application with Mono/.NET based on the Getting started tutorial, you eventually discover that you need a few extra assembly (DLL) references that the tutorial doesn’t mention. For my Postgres (PostgreSQL) project my references are:

I won’t go into the details, other than to say that many of the resulting errors don’t give you a very clear indication of what exactly is missing.

To configure Fluent NHibernate to work with Postgres you will need the following:

var connectionStr = "Server=127.0.0.1;Port=5432;Database=the_db;User Id=user_name;Password=password;";

ISessionFactory sessionFactory = Fluently

.Configure()

.Database(PostgreSQLConfiguration.Standard.ConnectionString(connectionStr))

.Mappings(m => m.FluentMappings.AddFromAssemblyOf<TypeOfFluentNHibernateMapping>())

.ExposeConfiguration(BuildSchema)

.BuildSessionFactory();

private static void BuildSchema(Configuration config)

{

// This NHibernate tool takes a configuration (with mapping info in)

// and exports a database schema from it.

var dbSchemaExport = new SchemaExport(config);

//dbSchemaExport.Drop(false, true);

dbSchemaExport.Create(false, true);

}

TypeOfFluentNHibernateMapping is a class that inherits from FluentNHibernate.Mapping.ClassMap<T>. This tells Fluent NHibernate to load all ClassMap mappings from the assembly where this type is defined.

BuildSchema(…) creates the database schema based on the specified mapping configuration, and (re)creates the tables and the rest of it in the database specified by the connection string. I included the (commented-out) call to the schema export’s Drop method because the code originates from my unit tests, where I drop and recreate the database on each test run.

So far I like Fluent NHibernate, and my only complaint is the way NHibernate (not Fluent) handles enums. It assumes you want to store the enum member’s string name, whereas I like to store my enums in a separate table.

Sexy Transactions In Spring.NET

Posted: July 28, 2010 Filed under: C#, DataBase, Mono.NET | Tags: .NET 4, ADO.NET, Enterprise Services, Entity Framework, NHibernate, POCO, Spring.NET, SQL Server, System.Transactions, Transaction Management

Spring.NET really has tons of handy features you can put to work in your application. One of them is its transaction management, which provides an implementation-agnostic abstraction for your application. The first question you’re probably asking yourself is: why do you need to abstract away your transaction technology? The short answer is that there are various ways of using transactions and different transaction technologies: ADO.NET, Enterprise Services, System.Transactions, and the transaction implementations of other data access technologies like ORMs (NHibernate). I won’t go into the details of these, suffice to say that choosing, using and changing a transaction technology can become a complicated affair, and that Spring.NET protects your application from these ugly details. I encourage you to take a deep dive into the official Spring.NET documentation should you want to know more about the details behind this.

What I did was write a helper class that makes it a little easier and cleaner to use Spring.NET’s transaction management:

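using System;

using System.Linq;

// The Spring.NET namespaces for IApplicationContext, ContextRegistry,

// ITransactionOperations and ITransactionStatus are also required.

/// <summary>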

/// Manages database transactions by wrapping executed

/// methods in a transaction.

/// </summary>

public static class Transaction

{

/// <summary>

/// Executes the specified method in a transaction.

/// </summary>

public static void Execute(Action method, bool commitChanges = true)

{

// Create a new transaction

Instantiate.New<ITransactionOperations>().Execute(delegate(ITransactionStatus transaction)

{

// If changes should not be committed, make

// sure we roll back once done

if (!commitChanges) transaction.SetRollbackOnly();

method();

return null;

});

}

}

/// <summary>

/// Instantiates objects based on their name.

/// </summary>

public static class Instantiate

{

#region Fields

private static IApplicationContext context;

#endregion

#region Get objects from ApplicationContext

/// <summary>

/// Retrieves the object with the specified name.

/// </summary>

/// <typeparam name="T">The type of object to return.</typeparam>

/// <param name="name">The name of the object.</param>

/// <returns>A newly created object with the type specified by the name, or a singleton object if so configured.</returns>

public static T New<T>(string name)

{

return (T)Context.GetObject(name);

}

/// <summary>

/// Retrieves the object of the specified type.

/// </summary>

public static T New<T>()

{

// Return the first object in the context that is of (or derives from) type T.

return Context.GetObjectsOfType(typeof(T)).Values.Cast<T>().FirstOrDefault();

}

/// <summary>

/// Retrieves the object with the specified name, and constructor arguments.

/// </summary>

public static T New<T>(string name, object[] arguments)

{

return (T)Context.GetObject(name, arguments);

}

#endregion

#region Private methods

/// <summary>

/// Gets the context.

/// </summary>

/// <value>The context.</value>

private static IApplicationContext Context

{

get

{

if (context == null)

{

context = ContextRegistry.GetContext();

}

return context;

}

}

#endregion

}

Class Instantiate

The Instantiate class is a wrapper for Spring.NET’s IApplicationContext, with a few helper methods. It provides three overloads of the method New<T>, which retrieve objects from the ApplicationContext configuration. The most important one is New<T> without any arguments, which simply grabs the first object of the requested type it finds in the ApplicationContext. The other two overloads retrieve an object by its configured name (id), optionally passing constructor arguments.
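The two lookup styles, by name and by type, can be illustrated with a tiny in-memory registry. This is a hypothetical stand-in, not Spring.NET: ObjectRegistry and Register are made-up names, and it returns objects in registration order, which is an assumption of this sketch rather than a statement about how Spring orders GetObjectsOfType results.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class ObjectRegistry
{
    // Named objects, kept in registration order.
    private readonly List<KeyValuePair<string, object>> objects =
        new List<KeyValuePair<string, object>>();

    public void Register(string name, object instance)
    {
        objects.Add(new KeyValuePair<string, object>(name, instance));
    }

    // Lookup by name, like New<T>(string name).
    // Throws InvalidOperationException if no object has that name.
    public T New<T>(string name)
    {
        return (T)objects.First(entry => entry.Key == name).Value;
    }

    // Lookup by type, like New<T>(): the first registered object that can be
    // treated as T, or default(T) if there is none.
    public T New<T>()
    {
        return objects.Select(entry => entry.Value).OfType<T>().FirstOrDefault();
    }
}
```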

With the help of the Instantiate class, the Transaction class obtains the configured transaction management implementation from Spring’s ApplicationContext.

Class Transaction

This is where all the action really happens as far as database transactions go. The Transaction class has a single Execute method, which transparently wraps any block of code in a transaction. There are a few things you have to do to use Spring’s transaction management:

1. First get an instance of your chosen transaction implementation.

2. Call Execute with a delegate with an ITransactionStatus argument.

3. When you’re done, you have to return something, even if it’s null.

My goal is to remove the above 3 requirements, so I can just pass a block of code through to get it wrapped in a transaction. This is exactly what Execute achieves:

Transaction.Execute(() =>

{

// Get a FileTypeRepository

var fileTypeRepository = Instantiate.New<IFileTypeRepository>();

// Add an object

fileTypeRepository.Add(fileType);

// Save the changes to the database

fileTypeRepository.SaveChanges();

});

Spring.NET provides 4 transaction implementations:

- Spring.Data.Core.AdoPlatformTransactionManager – local ADO.NET based transactions

- Spring.Data.Core.ServiceDomainPlatformTransactionManager – distributed transaction manager from Enterprise Services

- Spring.Data.Core.TxScopeTransactionManager – local/distributed transaction manager from System.Transactions.

- Spring.Data.NHibernate.HibernateTransactionManager – local transaction manager for use with NHibernate or mixed ADO.NET/NHibernate data access operations.

To configure your transaction manager for the ApplicationContext you need:

<objects xmlns="http://www.springframework.net">
  <object id="transactionManager"
          type="Spring.Data.Core.TxScopeTransactionManager, Spring.Data" />
  <object id="transactionTemplate"
          type="Spring.Transaction.Support.TransactionTemplate, Spring.Data"
          autowire="constructor" />
</objects>

Spring.NET provides a TransactionTemplate that handles all the necessary transaction logic and resources, like commits, rollbacks, and errors. It frees you from getting involved with low-level transaction management details, and provides all transaction management out of the box. If you prefer, you can directly access a transaction manager through the IPlatformTransactionManager interface to manage the details of your transaction process.

TransactionTemplate implements the ITransactionOperations interface. It has a constructor that requires an IPlatformTransactionManager instance, and all transaction managers implement the IPlatformTransactionManager interface. In other words, TransactionTemplate wraps the specified transaction manager. The required transaction manager is provided to the TransactionTemplate through autowiring of the constructor’s parameters: the autowiring searches through the configured objects and returns the first one that implements the IPlatformTransactionManager interface, which is transactionManager in our case.

Object transactionManager is an instance of TxScopeTransactionManager, which is in turn based on System.Transactions.

Transaction.Execute has an optional second parameter, commitChanges. It defaults to true, so unless you pass false the transaction manager will attempt to commit the changes. I pass false when I test my application and want all test data to be rolled back.
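Because TxScopeTransactionManager is based on System.Transactions, the commit/rollback behavior of commitChanges can be sketched with TransactionScope directly. This is not how Spring.NET implements it, just a minimal stand-in under that assumption; ScopedTransaction is a hypothetical name.

```csharp
using System;
using System.Transactions;

public static class ScopedTransaction
{
    // Runs the action inside an ambient System.Transactions transaction.
    // Complete() is only called when commitChanges is true; disposing the
    // scope without Complete() rolls the transaction back.
    public static void Execute(Action method, bool commitChanges = true)
    {
        using (var scope = new TransactionScope())
        {
            method();

            if (commitChanges)
            {
                scope.Complete();
            }
        }
    }
}
```

If method throws, the scope is disposed before Complete() is reached, so the transaction rolls back in that case as well.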

Since commitChanges is an optional parameter (a C# 4 feature), you’ve probably figured out by now that I recompiled Spring.NET to run on .NET 4.

The example IFileTypeRepository is actually based on Entity Framework 4 with Plain Old CLR/C# Objects (POCO), in case you were wondering whether Spring.NET’s transaction management works with Entity Framework.

Taking Another Look At Inheritance

Posted: May 11, 2010 Filed under: InfoTech, Mono.NET, SoftwareDevelopment | Tags: C#, Composition, Containment, Fragile Base Class, Inheritance, Interfaces, Object Oriented Programming, OOP, Polymorphism, Software Development

In my first lesson on Object Oriented Programming (OOP) I was taught how amazing inheritance is for code reuse and object classification. Very excited and with high expectations, I set off with this new concept from my OOP toolbox, using it at every possible opportunity. As time went by, I realized all is not well: inheritance has a lot of subtle and unanticipated implications for a system’s behavior and implementation, beyond the quick class definition. Inheritance often initially looks like the right solution, and only later does it become apparent that there are unintended consequences, often when it’s too late to make design changes. I hope to provide the full story of inheritance here, and how to make sure that it has a happy ending.

Let’s start with the basics: inheritance is a classification where one type is a specialization of another. The purpose of inheritance is to create simpler reusable code by creating a common base class that shares its implementation with one or more derived classes. Be forewarned: inheritance needs to be very carefully planned and implemented, otherwise you will get the reuse without the simplicity.

Deciding whether or not to use inheritance involves answering the following questions:

- What is wrong with inheritance?

- When to use inheritance, and when to avoid it?

- If inheritance is not used, what alternatives are available?

- If inheritance is used, how should it be used?

Problems of Inheritance

So what is wrong with inheritance? The biggest problems of inheritance stem from its fragile base classes and the tight coupling between the base class and its derived classes.

Fragile base class

The fragile base class problem occurs when changes to a base class, even if its interface remains intact, break the correctness of the derived classes that inherit from it. Consider the following example:

1.

public class Employee

{

protected double salary;

protected virtual void increase(double amount)

{

salary += amount;

}

protected void receiveBonus(double amount)

{

salary += amount;

}

}

public class Contractor: Employee

{

protected override void increase(double amount)

{

receiveBonus(amount);

}

}

Next a developer updates class Employee as follows:

2.

public class Employee

{

protected double salary;

protected virtual void increase(double amount)

{

salary += amount;

}

protected void receiveBonus(double amount)

{

// salary += amount;

increase(amount);

}

}

public class Contractor: Employee

{

protected override void increase(double amount)

{

receiveBonus(amount);

}

}

The modification made to the base class Employee in step 2 creates an infinite recursion in class Contractor (increase calls receiveBonus, which now calls increase), without any modifications to Contractor. This problem will be silently introduced into the system that uses Contractor, and will only manifest at run time, when the recursion overflows the stack and brings the process down. In a nutshell, this is the Fragile Base Class problem of inheritance. It is also the biggest problem that occurs when using inheritance to share functionality.
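One common defensive technique is for a base class never to call its own overridable methods from its other methods, and to route shared logic through a private helper instead. A minimal sketch of the example above written that way; addToSalary, Salary and GiveRaise are hypothetical additions so the classes can be exercised:

```csharp
public class Employee
{
    protected double salary;

    // Read-only view of the salary, added for this sketch.
    public double Salary { get { return salary; } }

    // Public entry point, added for this sketch.
    public void GiveRaise(double amount)
    {
        increase(amount);
    }

    // Overridable hook: derived classes may change how a raise is applied.
    protected virtual void increase(double amount)
    {
        addToSalary(amount);
    }

    // Calls the private helper, never the overridable hook, so an override
    // of increase() that calls receiveBonus() cannot recurse.
    protected void receiveBonus(double amount)
    {
        addToSalary(amount);
    }

    private void addToSalary(double amount)
    {
        salary += amount;
    }
}

public class Contractor : Employee
{
    protected override void increase(double amount)
    {
        receiveBonus(amount);
    }
}
```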

Tight Coupling and Weak Encapsulation

Encapsulation, also referred to as information hiding, is one of the pillars of good OO design. It is a technique for reducing dependencies between separate modules, by defining contractual interfaces. Other objects depend only on the contractual interface, and the module can be changed without affecting dependent objects, as long as the new implementation doesn’t require a new interface.

Base classes and derived classes can access the members of each other:

- The derived class uses base class methods and variables.

- The base class uses overridden methods in derived classes.

When base and derived classes become dependent on each other to this extent, it becomes difficult and even impossible to make changes to one class, without making corresponding changes in the other class.

Inheritance best practices

Implement “is a” through public inheritance

A base class constrains and influences how derived classes operate. If there is a possibility that the derived class might deviate from the base class’s interface contract, inheritance is not the right implementation technique. Consider using interfaces and/or composition under these circumstances.

Design and document for inheritance or prohibit it

Together with the ability to quickly write reusable code comes the risk of adding complexity to an application. Therefore, either plan for inheritance by allowing and documenting it, or prohibit it entirely by declaring a class as final (Java) or sealed (C#). This is not always an option, because some frameworks, NHibernate for instance, force you to declare persisted class members as virtual.

Adhere to the Liskov Substitution Principle

In a 1987 keynote address entitled “Data abstraction and hierarchy”, Barbara Liskov argued that you shouldn’t inherit from a base class unless the derived class is truly a more specific version of the base class. The derived class must be a perfectly interchangeable specialization of the base class. The methods and properties inherited from the base class should have exactly the same meaning in a derived class. If LSP is applied, inheritance can reduce complexity, because it allows you to focus on the general role of the objects.
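The classic Rectangle/Square example (a common textbook illustration, not taken from the article) shows what "perfectly interchangeable" rules out. The JavaScript sketch below assumes a mutable Rectangle whose contract is that width and height can be set independently; Square is geometrically a rectangle, but as a derived class it breaks that contract.

```javascript
// Base class contract: width and height can be changed independently.
class Rectangle {
  constructor(width, height) {
    this.width = width;
    this.height = height;
  }
  setWidth(w) { this.width = w; }
  setHeight(h) { this.height = h; }
  area() { return this.width * this.height; }
}

// "Is a" rectangle geometrically, but changes the meaning of the setters.
class Square extends Rectangle {
  constructor(side) { super(side, side); }
  setWidth(w) { this.width = w; this.height = w; }
  setHeight(h) { this.width = h; this.height = h; }
}

// Client code written against Rectangle's contract.
function stretch(rect) {
  rect.setWidth(5);
  rect.setHeight(4);
  return rect.area(); // the caller expects 5 * 4 = 20
}

const rectArea = stretch(new Rectangle(1, 1)); // 20
const squareArea = stretch(new Square(1)); // 16: Square is not substitutable
```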

Avoid deep inheritance hierarchies

The complexity that deep inheritance hierarchies introduce into an application far outweighs their benefits. Inheritance hierarchies more than three levels deep considerably increase fault rates. Inheritance should improve code reuse and reduce complexity. Consider applying the 7 ± 2 rule as a limit on the total number of derived classes in a hierarchy.

Specify what you want derived classes to inherit

Plan and control how derived classes can specialize a base class. Derived classes can inherit method interfaces, implementations or both. Abstract methods only provide an interface, without a default implementation, whereas virtual/overridable methods provide an interface and a default implementation. Non-overridable methods provide an interface and an implementation to derived classes, but derived classes cannot replace the inherited implementation with their own.

Don’t override a non-overridable member function

Don’t reuse the names of private or non-overridable methods, properties and variables from the base class in derived classes. Doing so makes it look as if a member is overridden in the derived class, when it is actually a different member with the same name.

Move common interfaces, data, and behavior as high as possible in the inheritance hierarchy

The higher up in the inheritance hierarchy interfaces, data, and behavior are moved, the easier it is for derived classes to reuse them. But at the same time, don’t share a member with derived classes if it is not common to all of them.

Don’t confuse data or objects with classes

There shouldn’t be a separate class for every object. For example, there should be only one Person class, with objects for Jack and Jill, and not a Jack class and a Jill class with one object each. Warning bells should go off when you notice classes with only one object.

Be cautious when a base class only has a single derived class

Don’t use inheritance if there isn’t a clear need for it at the moment. Don’t try to provide for some future extension, if you’re not 100% sure what those future needs are. Rather focus on making current code easy to use and understand.

Be cautious of classes that override a base method with an empty implementation

This means that the overridden method isn’t common to all the derived classes, and is not suitable for inheritance. To solve this, move the implementation into a separate class and reference it from the derived classes that require it (composition).

Prefer polymorphism to extensive type checking

Instead of checking an object’s type with a chain of if or case statements (for example typeof(Contractor) == person.GetType()), consider using derived classes that implement a common interface. Each object then runs the correct method itself, so callers no longer need to work out what the object is before calling it.
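A JavaScript sketch of the difference, using the Person hierarchy from the database section of this post (the constructor parameters and `describe()` method are assumptions for illustration). The type-checking function has to be edited for every new subclass, and its check order matters because a Contractor is also an Employee; the polymorphic version asks each object to describe itself.

```javascript
class Person {
  constructor(firstName, lastName) {
    this.firstName = firstName;
    this.lastName = lastName;
  }
  describe() {
    return `${this.firstName} ${this.lastName}`;
  }
}

class Client extends Person {
  constructor(firstName, lastName, company) {
    super(firstName, lastName);
    this.company = company;
  }
  describe() {
    return `${super.describe()} (client at ${this.company})`;
  }
}

class Employee extends Person {
  constructor(firstName, lastName, salary) {
    super(firstName, lastName);
    this.salary = salary;
  }
  describe() {
    return `${super.describe()} (employee)`;
  }
}

class Contractor extends Employee {
  constructor(firstName, lastName, salary, contractTerm) {
    super(firstName, lastName, salary);
    this.contractTerm = contractTerm;
  }
  describe() {
    return `${super.describe()} on a ${this.contractTerm}-month contract`;
  }
}

// Type checking: must change for every new subclass; Contractor must be tested first.
function describeWithTypeChecks(p) {
  if (p instanceof Contractor) return "contractor";
  if (p instanceof Employee) return "employee";
  if (p instanceof Client) return "client";
  return "person";
}

// Polymorphism: no type checks, each object knows how to describe itself.
const people = [
  new Client("Jill", "Smith", "Acme"),
  new Employee("John", "Doe", 11000),
  new Contractor("Jack", "Jones", 9000, 6),
];
const descriptions = people.map((p) => p.describe());
```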

Be cautious of protected data

When variables and properties are protected, you lose the benefits of encapsulation between base and derived classes. Allowing derived classes to manipulate protected data members directly increases the likelihood that the base class is left in an unexpected state, corrupting the object.
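JavaScript has no protected modifier, but its private fields make the alternative easy to see. In this hypothetical sketch, salary stays private to Employee and the only way to change it is `adjustSalary()` (an illustrative name), which enforces the rule that salary can never become negative; a derived class has to go through it.

```javascript
class Employee {
  #salary; // private instead of protected: only Employee's methods touch it

  constructor(salary) {
    if (!Number.isFinite(salary) || salary < 0) {
      throw new RangeError("Starting salary must be a non-negative number");
    }
    this.#salary = salary;
  }

  get salary() {
    return this.#salary;
  }

  // The single place that changes salary, so the invariant is enforced once.
  adjustSalary(amount) {
    if (!Number.isFinite(amount)) {
      throw new TypeError("Amount must be a finite number");
    }
    if (this.#salary + amount < 0) {
      throw new RangeError("Salary cannot become negative");
    }
    this.#salary += amount;
  }
}

class Contractor extends Employee {
  applyPenalty(amount) {
    // No direct field access: the base class's rules always apply.
    this.adjustSalary(-amount);
  }
}

const contractor = new Contractor(1000);
contractor.applyPenalty(200);
console.log(contractor.salary); // 800
```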

Alternatives to Inheritance

Composition

In Object Oriented Programming (OOP) terms, Composition, also referred to as Containment, is a fancy term that simply means one object holds a reference to another. So instead of inheriting from a base class, you create an instance of the class whose functionality you need, and use it from the client class. Pretty standard stuff, but its implications for reusing functionality are important. Take the List<T> class reused by the PersonList class through inheritance:

```csharp
public class List<T> : IList<T>, ICollection<T>,
    IEnumerable<T>, IList, ICollection, IEnumerable
{
    // ...
}

public class PersonList : List<Person>
{
    // ...
}
```

Now take the same List<T> class reused by the PersonList class through composition:

```csharp
public class List<T> : IList<T>, ICollection<T>,
    IEnumerable<T>, IList, ICollection, IEnumerable
{
    // ...
}

public class PersonList
{
    // The contained list is private; outside objects only see PersonList's own methods.
    private List<Person> people = new List<Person>();

    // ...
}
```

The example demonstrates the following:

- Inheritance is quicker to implement, but discloses the inner workings of the inherited class. The inheriting PersonList already has all the methods it needs to store Person objects, whereas the PersonList that references a List<Person> first needs to implement some public methods to make the private list usable to outside objects. The problem with the inheriting PersonList is that all public methods and properties inherited from List<T> are also made available to outside objects. Since outside objects usually only require a small subset of these, you are providing less guidance on how your object should be used. Another way to look at it is to say you have a weak object contract. Hiding a private List<Person> variable behind custom public methods provides a strict contract of how PersonList should be used, and hides its implementation details from outside objects. For instance, should you rather wish to use a Hashtable instead of a List, you can do so without worrying about affecting any objects that use PersonList. Inheritance is a little faster to use, but composition makes your code easier to use and longer lasting by reducing the impact of changes.

- It is easier (and safer) to change the method interface/definition of a class based on composition. Base classes are fragile and derived classes are rigid. The Fragile Base Class problem clearly demonstrates how base classes are fragile: a change ripples through to derived classes in unintended ways, eventually breaking an application. Derived classes are rigid because you cannot change an overridden method’s interface without making sure it is still compatible with its base class.

- Composition allows you to delay the instantiation of the referenced object until it is required. This would be the case if the List<Person> variable in PersonList wasn’t instantiated immediately, but only when it is used for the first time. With inheritance, the base part of an object is always created together with the derived object, so you have no control over its lifetime.

- Composition allows you to change the behavior of an object at runtime. The referenced object can be replaced while the application is running, without requiring changes to the code. This is especially true when the referenced object is accessed through an interface. When inheritance is used, you can only change the base class by changing the code and recompiling the executable.

- Composition allows you to reuse functionality from multiple classes. With inheritance, a class can reuse only a single base class (C# and Java do not support multiple class inheritance), whereas composition lets a class use, and combine, the functionality of several classes. For instance, imagine an application with classes for different types of superheroes. Some can create spider webs, others fly like bats, and others have retractable bone claws. Now imagine you can mix and match these to create the ultimate superhero, one that can create spider webs, fly like a bat and has retractable bone claws. If you used inheritance, you would be forced to choose only one type of superhero.
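Several of the composition benefits listed above can be sketched in JavaScript. This is a minimal illustration, not a port of the C# code; PersonList's `add`/`findById`/`count` members, the ability objects and SuperHero are all hypothetical names. PersonList keeps its storage private and only creates it on first use, so callers see a narrow contract (there is no `splice` or `sort` to misuse, and the Map could be swapped for an array without affecting them). SuperHero combines several small ability objects and can have them replaced while running.

```javascript
// Narrow contract with hidden, lazily created storage.
class PersonList {
  #people = null; // contained Map, not created until first needed

  #storage() {
    if (this.#people === null) this.#people = new Map();
    return this.#people;
  }

  get isInitialized() {
    return this.#people !== null;
  }

  add(person) {
    const people = this.#storage();
    if (people.has(person.id)) throw new Error(`Duplicate person id: ${person.id}`);
    people.set(person.id, person);
  }

  findById(id) {
    return this.#people === null ? undefined : this.#people.get(id);
  }

  get count() {
    return this.#people === null ? 0 : this.#people.size;
  }
}

// Small behaviours that can be mixed and matched.
const webSlinging = { use: () => "shoots a web" };
const batFlight = { use: () => "flies like a bat" };
const boneClaws = { use: () => "extends bone claws" };

class SuperHero {
  constructor(name, abilities) {
    this.name = name;
    this.abilities = abilities; // contained objects, not base classes
  }

  showOff() {
    return `${this.name} ${this.abilities.map((a) => a.use()).join(", ")}`;
  }
}

const list = new PersonList();
const lazyBefore = list.isInitialized; // false: nothing created yet
list.add({ id: 1, firstName: "Jack" });
list.add({ id: 2, firstName: "Jill" });

const hero = new SuperHero("Ultimate", [webSlinging, batFlight, boneClaws]);
const firstShow = hero.showOff();
hero.abilities = [batFlight]; // behaviour changed at run time, no subclass needed
const secondShow = hero.showOff();
```

With `class PersonList extends Array {}` instead, every array method would be part of PersonList's public surface, which is the weak contract described in the first point.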

Interfaces

Interfaces are a great way to facilitate composition. You could almost call it Composition By Contract, because interfaces let you build strong object contracts. When a class implements an interface, it doesn’t get any functionality from a base class. An interface is only a contract telling objects what the class will do; it provides no implementation to the classes that implement it. This means the Fragile Base Class problem does not affect classes implementing an interface, because no functionality flows from the interface to the implementing class.

Interfaces allow loosely coupled compositions, whereas inheritance, and composition without interfaces, can lead to tight coupling. Other objects bind only to the interface contract, not to the object implementing the interface. Therefore the underlying implementation can be changed at any time during the application’s execution.

Patterns

There are a number of patterns that can be used as a more sophisticated replacement of inheritance. For now I will just mention them here, and maybe discuss each one in a future post:

- Strategy Pattern

- Part Pattern

- Business Entity Pattern

When to use inheritance and when to use composition

One of the most important goals you as a developer should have is to reduce the complexity of your application. Inheritance can quickly mutate from something simple into a far-reaching chain of complexity and confusion. Rather try to use composition/containment together with interfaces. You should therefore be biased against using inheritance, and only use it when you are absolutely sure that you cannot do without it:

- To share common data but not behavior, use composition: place the shared data in a common object that the other classes reference.

- To share common behavior but not data, inherit from a common base class that implements the common methods.

- To share common behavior and data, inherit from a common base class that implements the common methods and data.

- Use composition when you want a class to control its own interface; inherit when you want the base class to define the interface.

Inheritance hierarchies in a relational database

Relational databases do not cater for the concept of inheritance, and require you to map your classes to your database schema (hence the term Object-Relational Mapping). Although the impedance mismatch between objects and tables makes mapping your classes to a database schema complicated, it should not stop you from using inheritance.

There are four strategies for implementing inheritance in a relational database:

- Map the entire class hierarchy to one table.

- Map each concrete class to its own table.

- Map each class to its own table.

- Map the classes to a generic table structure.

Map the entire class hierarchy to one table

In our Person example, this approach creates one table with columns for the combined properties of all the classes in the hierarchy, plus an extra type column (or boolean columns) to identify each object’s type. Client, Employee and Contractor objects are all stored in the same table, which has all the columns of the entire class hierarchy: Id, FirstName, LastName, Nationality, Company, Salary, and ContractTerm, in addition to the type column.

The main advantage of this strategy is simplicity. It is easy to understand, query and implement. Querying data is fast and easy because all the data for an object is in one table. Adding new classes is painless – all that’s required is to add the additional properties of the new classes to the table.

The disadvantages of this strategy are:

- Unconstrained use of tables with a growing number of empty columns. A child class will not use the columns of its siblings, so if the hierarchy has a lot of child classes, you end up with table rows containing a lot of empty columns. A further problem is that it restricts the use of the NOT NULL constraint: a column might be compulsory for one child class but not for another, yet they share the same table. Therefore none of the child classes’ columns can have the NOT NULL constraint.

- Increased coupling of classes. All classes in the hierarchy share the same table, therefore a change to the table can affect them all.
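A small JavaScript sketch of the row layout this strategy produces. The column list follows the article; naming the type column `PersonType` and the function `toPersonRow` are assumptions for illustration, not part of any ORM's API.

```javascript
// Every column of the whole Person hierarchy, plus a PersonType discriminator.
const PERSON_COLUMNS = [
  "Id", "PersonType", "FirstName", "LastName", "Nationality",
  "Company", "Salary", "ContractTerm",
];

// Maps an object of the given type to a single row in the shared table.
function toPersonRow(type, values) {
  const row = {};
  for (const column of PERSON_COLUMNS) {
    row[column] = null; // columns this subtype doesn't use stay NULL
  }
  row.PersonType = type;
  for (const [column, value] of Object.entries(values)) {
    if (!PERSON_COLUMNS.includes(column)) {
      throw new Error(`Unknown column: ${column}`);
    }
    row[column] = value;
  }
  return row;
}

const rows = [
  toPersonRow("Client", { Id: 1, FirstName: "Jill", LastName: "Smith", Nationality: "ZA", Company: "Acme" }),
  toPersonRow("Contractor", { Id: 2, FirstName: "John", LastName: "Doe", Nationality: "ZA", Salary: 11000, ContractTerm: 12 }),
];
```

The Client row carries NULL in Salary and ContractTerm, and the Contractor row carries NULL in Company, which is exactly why none of those columns can be declared NOT NULL.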

Map each concrete class to its own table

With this strategy you create a table for each concrete class implementation. Continuing with the Person example, you will create the following tables:

- Client, with columns Id, FirstName, LastName, Nationality and Company.

- Employee, with columns Id, FirstName, LastName, Nationality and Salary.

- Contractor, with columns Id, FirstName, LastName, Nationality, Salary and ContractTerm.

This strategy is also fairly simple and easy to understand, query and implement. It is simple to query because all of an object’s data comes from one table. Query performance is also good, because you don’t have to do multiple joins to get a single object’s data.

Maintaining and changing a superclass’s schema is a laborious process, though. For instance, say you want to add Gender to the Person class. This means you have to update three tables: Client, Employee and Contractor.
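The maintenance cost can be made concrete with a JavaScript sketch that derives each concrete table's columns from hypothetical class metadata (the `classes` object and its `parent`/`abstract`/`fields` keys are illustrative). It assumes Person is abstract, so it gets no table of its own, which matches the three tables listed above.

```javascript
// Hypothetical class metadata for the article's Person hierarchy.
const classes = {
  Person: { parent: null, abstract: true, fields: ["Id", "FirstName", "LastName", "Nationality"] },
  Client: { parent: "Person", abstract: false, fields: ["Company"] },
  Employee: { parent: "Person", abstract: false, fields: ["Salary"] },
  Contractor: { parent: "Employee", abstract: false, fields: ["ContractTerm"] },
};

// Collects a class's columns, inherited ones first.
function columnsOf(name) {
  const cls = classes[name];
  const inherited = cls.parent === null ? [] : columnsOf(cls.parent);
  return [...inherited, ...cls.fields];
}

// One table per concrete class, each repeating all inherited columns.
function concreteTables() {
  const tables = {};
  for (const [name, cls] of Object.entries(classes)) {
    if (!cls.abstract) {
      tables[name] = columnsOf(name);
    }
  }
  return tables;
}

const before = concreteTables();
classes.Person.fields.push("Gender"); // one change to the superclass...
const after = concreteTables();
// ...means altering every concrete table.
const tablesToAlter = Object.keys(after).filter((t) => after[t].includes("Gender"));
```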

Map each class to its own table

Mapping each class to its own table requires that the primary key of each child class also serve as the foreign key pointing to the related row in the parent table. The table of the class at the top of the hierarchy, in our case it’s Person, contains a row for every object in the hierarchy. The example will have the following tables:

- Person, with columns Id, FirstName, LastName, and Nationality. Id is not a foreign key, because Person is at the top of the inheritance hierarchy.

- Client, with columns Id and Company. Id is also a foreign key referencing the row in table Person that contains this object’s Person data.

Id cannot be an auto incrementing or generated identity column because the parent row in the Person table will determine its value.

- Employee, with columns Id and Salary. Just as in the case of Client, Id is also a foreign key referencing the parent row in table Person.

- Contractor, with columns Id and ContractTerm. Just like Client and Employee, Id is also a foreign key, referencing the parent row in the Employee table.

To make it easier to identify what kind of object a row in the root table represents, you can add a PersonType table and PersonTypeId attribute to the Person table. This will make it easier to get the type of Person in queries, because you don’t have to go through several joins to get to the last child table that tells you what the object’s actual type is.
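A JavaScript sketch of how one Contractor is split across the tables in this strategy, and joined back again. The numeric PersonTypeId values and the function names are hypothetical; the point is that every row shares the same Id.

```javascript
// Hypothetical ids from a PersonType lookup table.
const PERSON_TYPE_IDS = { Client: 1, Employee: 2, Contractor: 3 };

// One object becomes one row per class in its hierarchy, all sharing the same Id.
function contractorToRows(c) {
  return {
    Person: {
      Id: c.id,
      PersonTypeId: PERSON_TYPE_IDS.Contractor,
      FirstName: c.firstName,
      LastName: c.lastName,
      Nationality: c.nationality,
    },
    Employee: { Id: c.id, Salary: c.salary }, // Id is also a FK to Person.Id
    Contractor: { Id: c.id, ContractTerm: c.contractTerm }, // Id is also a FK to Employee.Id
  };
}

// Rebuilding the object is the equivalent of joining the three tables on Id.
function rowsToContractor(rows) {
  const { Person: p, Employee: e, Contractor: c } = rows;
  if (p.Id !== e.Id || e.Id !== c.Id) {
    throw new Error("Rows do not belong to the same object");
  }
  return {
    id: p.Id,
    firstName: p.FirstName,
    lastName: p.LastName,
    nationality: p.Nationality,
    salary: e.Salary,
    contractTerm: c.ContractTerm,
  };
}

const john = { id: 7, firstName: "John", lastName: "Doe", nationality: "ZA", salary: 11000, contractTerm: 12 };
const rows = contractorToRows(john);
const roundTrip = rowsToContractor(rows);
```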

Map classes to a generic table structure

The last, and most complex, way to store your objects and their inheritance hierarchy is a fixed table structure that can store any object. The following diagram describes how you would lay out your mapping classes and corresponding tables to accommodate any mapping, inheritance as well as relationships:

The easiest way to explain this mapping meta-data engine is to use an example. Consider the following Employee object: new Employee { FirstName = “John”, LastName = “Doe”, Nationality = “ZA”, Salary = 11000 }. The Type table will have two entries: one for the Person type/class, and one for the Employee class. ParentTypeId of the Employee record in the Type table references the TypeId of the base Person class. IsSystem is used to identify native system types, such as string and int. The Property table stores all the member properties and variables of a class, so Employee will have one entry for the Salary property, and Person will have 3 entries for FirstName, LastName and Nationality. This takes care of the type and mapping meta-data.

Object instance state is persisted as follows. For the Employee called “John Doe” there will be one entry in the Object table for the instance, and 4 entries in the ObjectPropertyValue table, one for each property. A Property can store a primitive type, like a string, in which case the Value column/property will contain the actual value. If a Property references another Object, then the Value column/property will contain the ObjectId of the referenced object.
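The John Doe example can be traced with an in-memory JavaScript sketch. The row shapes follow the table and column names mentioned above (TypeId, ParentTypeId, IsSystem, ObjectId, Value); PropertyId, the `persist` function and storing every value as a string are assumptions made for the illustration.

```javascript
// Hypothetical in-memory stand-ins for the Type and Property meta-data tables.
const typeTable = [
  { TypeId: 1, Name: "Person", ParentTypeId: null, IsSystem: false },
  { TypeId: 2, Name: "Employee", ParentTypeId: 1, IsSystem: false },
];
const propertyTable = [
  { PropertyId: 1, TypeId: 1, Name: "FirstName" },
  { PropertyId: 2, TypeId: 1, Name: "LastName" },
  { PropertyId: 3, TypeId: 1, Name: "Nationality" },
  { PropertyId: 4, TypeId: 2, Name: "Salary" },
];

// A type's properties, including those inherited through ParentTypeId.
function propertiesOf(typeId) {
  const type = typeTable.find((t) => t.TypeId === typeId);
  if (type === undefined) throw new Error(`Unknown TypeId: ${typeId}`);
  const inherited = type.ParentTypeId === null ? [] : propertiesOf(type.ParentTypeId);
  return [...inherited, ...propertyTable.filter((p) => p.TypeId === typeId)];
}

// One Object row for the instance, one ObjectPropertyValue row per property.
// Values are stored as strings here; a property that references another
// object would store that object's ObjectId instead (not handled in this sketch).
function persist(objectId, typeId, instance) {
  return {
    objectRow: { ObjectId: objectId, TypeId: typeId },
    valueRows: propertiesOf(typeId).map((p) => ({
      ObjectId: objectId,
      PropertyId: p.PropertyId,
      Value: String(instance[p.Name]),
    })),
  };
}

const johnDoe = { FirstName: "John", LastName: "Doe", Nationality: "ZA", Salary: 11000 };
const stored = persist(1, 2, johnDoe);
```

As described above, the Employee instance yields one Object row and four ObjectPropertyValue rows, three of them driven by properties inherited from Person.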

The above solution provides the most flexibility. However, it requires a complicated meta-data mapping and administration layer, and understanding, querying and reporting on the data becomes a very painful and slow process. This technique is only included for completeness. If this level of flexibility is required, I highly recommend that you rather look at a dedicated Object Relational Mapper (ORM) like NHibernate or Entity Framework. As a side note, I have not applied this specific solution in real life, so consider it only a proof of concept, and not a tried and tested implementation.

References

- Inheritance is evil, and must be destroyed: part 1. BernieCode.

- Strategy Pattern.

- Composition vs. Inheritance In Java. Eric Herman.

- Mapping Objects to Relational Databases: O/R Mapping In Detail. Scott Ambler.

- Designing an Inheritance Hierarchy. Visual Basic Programming Guide.

- A Case For Sealing Classes In Java. Marina Biberstein, Vugranam C. Sreedhar, and Ayal Zaks.

- A Case for Class Sealing in Java. Haifa Vugranam C. Sreedhar, Watson Ayal Zaks, and Haifa.

- Composition versus Inheritance: A Comparative Look at Two Fundamental Ways to Relate Classes. Bill Venners.

- Inheritance versus composition: Which one should you choose? Bill Venners.

- Java Ranch Forums.

- Code Complete, 2nd Edition. Steve McConnell.

Microsoft Cloud Computing In A Nutshell

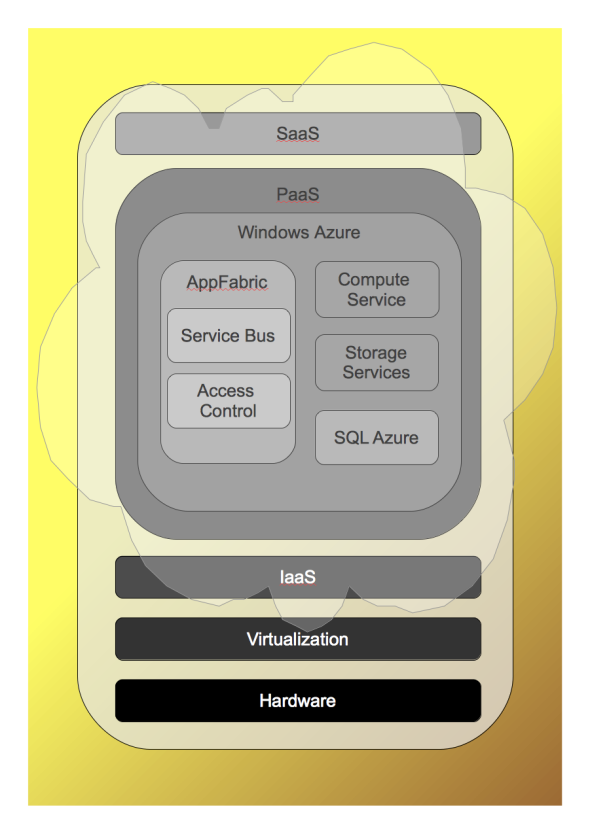

Posted: January 4, 2010 Filed under: InfoTech, Mono.NET, SoftwareDevelopment, Web | Tags: .NET Services, Amazon EC2, AppFabric, AppFabric Access Control, AppFabric Service Bus, Cloud Burst, Cloud Computing, Enterprise Cloud Computing, Google AppEngine, Hypervisor, IaaS, Infrastructure as a Service, PaaS, Platform as a Service, Software as a Service, SQL Azure, Virtualization, Windows Azure, Windows Azure Storage Services

Cloud computing is when computing resources and applications are virtualized and accessed as a service on the Internet. The term cloud is used as a metaphor for the Internet. Cloud computing builds on a stack of technologies, where each layer depends directly or indirectly on the layers below it. The layers in the stack are (1) hardware, (2) virtualization, (3) Infrastructure as a Service, (4) Platform as a Service and (5) Software as a Service.

Virtualization is a vital component of the cloud computing stack. Vendors providing cloud computing services make heavy use of virtualization to provide Infrastructure as a Service (IaaS) or to optimize their own data centers. Virtualization is when multiple virtual resources are created against an emulation layer that makes them believe they have exclusive access to the underlying hardware. It is also when a single virtual resource is created from multiple hardware components. Different types of resources are virtualized in the enterprise today: desktops, applications, servers, networks, storage, and application infrastructure.

For the rest of this discussion, we mean server virtualization when we talk about virtualization. Server virtualization is based on a hypervisor. A hypervisor is a virtual machine monitor that intercepts calls to the hardware. It provides virtual memory and processors, allowing multiple operating systems to run on the host computer concurrently. There are two types of hypervisor:

- Type 1 hypervisor: runs directly on the host computer’s hardware, without a host operating system in between. Examples include Microsoft Hyper-V and VMware ESX.

- Type 2 hypervisor: runs as an application on the host computer’s operating system. Examples include Microsoft Virtual PC, Microsoft Virtual Server, and Sun VirtualBox.

Infrastructure as a Service (IaaS) makes infrastructure available on demand as a fully outsourced service, based on the client’s consumption and specifications. Amazon’s Elastic Compute Cloud (EC2) is probably the best example to demonstrate the IaaS concept. Amazon EC2 provides a web service and command line tools through which clients can boot an Amazon Machine Image to create a virtual machine instance containing the desired software. You can configure servers with your desired operating system, web servers, database servers, and any other software you need. You can also adjust your processing and storage capacity in minutes, and only pay for the capacity you use.

Platform as a Service (PaaS) also offers compute power, storage, and networking infrastructure over the web, not directly as servers, but as a runtime environment for hosting applications or application components. Windows Azure Services Platform and Google AppEngine fall under this category. When you build an application on PaaS, it uses complete application services specific to the framework, like workflow, storage or security. This is distinct from IaaS, where the developer needs to deal with low-level resources, such as databases and servers. For instance, when you use Windows Azure’s Compute Service, you don’t have to make decisions about the application server that will host the application: its disk size, operating system, processor and that kind of thing. You just provide your web application to the Compute Service, and it takes care of the rest.

Software as a Service (SaaS). A web application or service provided by a vendor over the Internet, charged on a per-use basis. Examples of SaaS are SalesForce.com, Windows Live Hotmail, and GitHub.

Azure Services Platform

Finally, we can take a look at how Microsoft positions itself for cloud computing. Microsoft’s cloud service platform, called Windows Azure Platform, is the base framework for hosting and managing Microsoft’s cloud services, and for developing your cloud based apps. Windows Azure integrates with Visual Studio, making it easy to develop apps using Microsoft’s cloud services. Windows Azure serves as a type of application server that can host your applications and provide them with high performance computing and storage. You can use only the specific services you require, so you are not forced to host your entire client application on Windows Azure. But if you’re developing a web application that uses SQL Azure, it will be beneficial to host the client application on Windows Azure as well, because it will live close to the database, in the same data centre.

The Windows Azure Compute Service hosts .NET web applications. Windows Azure Storage Services provide various options for storing application data:

- Blob service, for storing text or binary data.

- Queue service, for reliable, persistent messaging between services.

- Table service, for structured storage that can be queried.

Two separate cloud services run on Windows Azure: AppFabric and SQL Azure. These provide specific features that will be used in your applications. Combining these service offerings with the Windows Azure foundation forms the complete Windows Azure Services Platform:

AppFabric

AppFabric provides advanced network and security services to enable and control apps. Previously called .NET Services, AppFabric delivers this functionality through two services: Access Control and Service Bus. .NET Services previously included the .NET Workflow Service, but it was decided to release it in a later version.

- AppFabric Service Bus (ASB): Provides a relay service that allows clients and services to discover, publish and communicate in a secure, consistent and reliable way. The ASB makes it easy to securely expose services that live behind firewalls or network address translation (NAT) boundaries, or that have frequently changing, dynamically assigned IP addresses. It allows cloud-based workflows to communicate with on-premises applications by traversing firewalls and NAT equipment. To the client application, apart from a few exceptions, the ASB is a Windows Communication Foundation (WCF) style service, with similar protocols, bindings, end-points, and behaviors. One of the main differences between an ASB service and a regular WCF service is that the ASB service lives on the web. ASB supports both REST and SOAP based services. The ASB provides:

- Federated namespace. Enables services to be published in a hierarchical namespace, with stable end-point addresses.

- Service directory. Service end-points are published to the ASB, and clients can locate them through an Atom/RSS feed.

- Message broker. Provides a publish/subscribe event bus, where messages are broadcasted in different categories, and clients filter them based on the relevant categories.

- Relay and connectivity. Ensures clients and services can reach each other regardless of their location, or whether it changes. This is one of the features that I am most excited about, as it allows you to make services on your local network available to client apps over the Internet, even when they sit behind a firewall.

ASB uses the AppFabric Access Control for its access control. This means all participants must first obtain a security token from the AAC, before they can communicate through the relay service.

- AppFabric Access Control (AAC): Provides access control to applications and services based on federated, claims-based identity providers, including enterprise directories and web identity systems such as Windows Live ID. What stands out is that AAC uses claims-based identity providers, and is part of the broader change from domain-based to claims-based authentication. If you’ve been using NTLM or Kerberos in your apps, then you know what domain-based authentication is. Domain-based authentication allows only a single token in a fixed format, with a specific set of claims or information dictated by the authentication standard. Claims-based authentication allows multiple token formats, with arbitrary information, or claims. This means an application that uses claims-based authentication can support different authentication types, and use extra information encoded in a token as claims. As a result, claims-based authentication can be used in more diverse environments, such as on the Internet as well as in a Windows domain. Claims-based authentication also allows an identity from one security domain, called a federated identity, to access resources in a different security domain.

SQL Azure

SQL Azure is Microsoft’s SQL Server in the cloud. SQL Azure is based on the same Microsoft SQL Server technologies that you have been using in your apps until now. Applications use the Tabular Data Stream (TDS) protocol to communicate with SQL Server, through one of the client libraries such as ADO.NET, Open Database Connectivity (ODBC), Java Database Connectivity (JDBC), and the SQL Server driver for PHP. For ADO.NET, it supports the .NET Framework Data Provider for SQL Server (System.Data.SqlClient) from the .NET Framework 3.5 Service Pack 1. This means you use exactly the same database libraries for SQL Azure that you use for SQL Server. An added benefit is that it makes it easy to migrate current applications from SQL Server to SQL Azure.

Some Final Thoughts

There are several benefits to using cloud computing:

- Dynamic computing resources. Resources can quickly adjust to match new demand. This frees enterprises from having to estimate the growth of their applications and resource consumption, and from making upfront investments that aren’t fully utilised. Should the company experience a reduction in business due to a downturn or similar negative event, computing resources can immediately be scaled down, and cash flows adjust accordingly.

- Cost. Think of all the costs an organization can save from a reduction in the installation, upgrading and maintenance of servers, data centers, networks, cabling, security, file systems, backups, and so forth. Not all projects will see an automatic reduction of costs as a result of cloud computing, especially if one only looks at it from a single project. But if you look at all the projects, over a number of years, then I believe an organization can see a dramatic reduction in costs if cloud computing is adopted in some areas.

- Complexity. By outsourcing computing resources to the cloud, organizations reduce the overall complexity of their systems.

- Capacity. Cloud computing provides cheap access to huge quantities of computing resources. Imagine a small company with an application that provides high definition movies and music over the web. An application like this will require vast amounts of storage and processing power, much more than what a small company can comfortably handle. To manage a data centre that will allow an application like this to run comfortably will be a huge burden to the company.

- Service orientation. Organizations can now gain some of the same benefits of adopting a Service Oriented Architecture (SOA) for their applications, by using cloud-based components in their applications. To a large degree infrastructure and other computing resources are now contract driven, providing a consistent service layer between the resource and the client application.

There are also some interesting emerging cloud computing models:

- Enterprise cloud computing. Using the same technology behind cloud computing inside the enterprise. This means that the services made available through the cloud are only accessible to applications running on the enterprise’s private network.

- Cloud bursting. A hybrid model where computing resources are accessed just like before in the traditional way, but when a resource hits a specified threshold the system uses cloud computing resources for the demand overflow.

References

- Briefing: Cloud Computing, Erica Naone, Technology Review.

- Design Considerations for S+S and Cloud Computing, Fred Chong, Alejandro Miguel, Jason Hogg, Ulrich Homann, Brant Zwiefel, Danny Garber, Joshy Joseph, Scott Zimmerman, and Stephen Kaufman, Microsoft Architecture Journal.

- Building Distributed Applications With .NET Services, Aaron Skonnard, MSDN Magazine.

- Working With The .NET Service Bus, Juval Lowy, MSDN Magazine.

- Windows Azure Tools for Microsoft Visual Studio (November 2009).

- Windows Identity Foundation Simplifies User Access for Developers.

- .NET Services: Access Control Service Drilldown, Justin Smith.

- Digital Identity for .NET Applications: A Technology Overview, David Chappell, MSDN Library.

- Understanding Public Clouds: IaaS, PaaS, & SaaS, Keith Pijanowski.

- An Introduction to Virtualization, Scott Delap, InfoQ.

- Amazon EC2 AMI Tools.

- Getting Started with AppFabric Service Bus, MSDN Library.

- Federated Identity: Patterns in a Service oriented World, Jesus Rodriguez and Joe Klug, Microsoft Architecture Journal.

- How the Cloud Stretches the SOA Scope, Lakshmanan G and Manish Pande, Microsoft Architecture Journal.

Call SOAP-XML Web Services With jQuery Ajax

Posted: September 25, 2009 Filed under: Mono.NET, Web | Tags: Ajax, JavaScript, jQuery, SOAP, Web Development, Web Services, XML

jQuery’s popularity has really exploded over the last few years. What really stands out about jQuery is its clear and concise JavaScript library. Other things I’ve come to appreciate over time are its deep functionality and completely non-intrusive configuration. Recently, to improve the responsiveness of my user interfaces, I decided to use jQuery’s Ajax to call SOAP-XML web services directly. I struggled to find good information on how this can be done. There are a lot of good examples on the web that demonstrate how to call JSON web services from jQuery Ajax, but almost none for SOAP-XML web services.

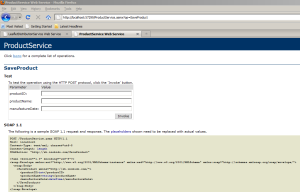

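Before you can call the web service from client script, you need to know the exact format of the operation’s request and response messages, which are defined by the service’s WSDL. If you’re using an ASP.NET (.asmx) web service, you can just point your browser to the web service’s URL and click on the operation’s name to see sample SOAP request and response messages.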

The example web service operation I’m using, SaveProduct, expects a request in the following format:

```
POST /ProductService.asmx HTTP/1.1
Host: localhost
Content-Type: text/xml; charset=utf-8
Content-Length: length
SOAPAction: "http://sh.inobido.com/SaveProduct"

<?xml version="1.0" encoding="utf-8"?>
<soap:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <SaveProduct xmlns="http://sh.inobido.com/">
      <productID>int</productID>
      <productName>string</productName>
      <manufactureDate>dateTime</manufactureDate>
    </SaveProduct>
  </soap:Body>
</soap:Envelope>
```

The JavaScript function that calls this operation looks like this:

```javascript
// Preferably write this URL out from the server side
var productServiceUrl = 'http://localhost:57299/ProductService.asmx?op=SaveProduct';

function beginSaveProduct(productID, productName, manufactureDate)
{
    // Fill in the operation's sample request with the actual parameter values
    var soapMessage =
        '<soap:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">' +
            '<soap:Body>' +
                '<SaveProduct xmlns="http://sh.inobido.com/">' +
                    '<productID>' + productID + '</productID>' +
                    '<productName>' + productName + '</productName>' +
                    '<manufactureDate>' + manufactureDate + '</manufactureDate>' +
                '</SaveProduct>' +
            '</soap:Body>' +
        '</soap:Envelope>';

    // POST the SOAP message; endSaveProduct runs when the request completes
    $.ajax({
        url: productServiceUrl,
        type: "POST",
        dataType: "xml",
        data: soapMessage,
        complete: endSaveProduct,
        contentType: "text/xml; charset=\"utf-8\""
    });

    // Returning false cancels the default action when used as an onclick handler
    return false;
}

function endSaveProduct(xmlHttpRequest, status)
{
    // Walk the SOAP response and read each saved product's fields
    $(xmlHttpRequest.responseXML)
        .find('SaveProductResult')
        .each(function()
        {
            var name = $(this).find('Name').text();
            // Use name (and the other fields) to update the page here
        });
}
```

To call the web service, we need to supply an XML message that matches the operation definition specified by the web service’s WSDL. With the operation’s sample request already in hand, all that’s required is to replace the type names of the operation’s parameters (int, string, dateTime) with their actual values. The variable soapMessage contains the complete XML message that we’re going to send to the web service.
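Concatenating raw values into the XML works for simple input, but the message becomes malformed as soon as a product name contains a character such as & or <, and a JavaScript Date concatenated into a string yields its toString() form rather than the xsd:dateTime format the request asks for. Below is a minimal sketch of a safer builder; the helper names escapeXml, toXsdDateTime and buildSaveProductEnvelope are hypothetical, and sending dates as UTC via toISOString() is an assumption about what the service should receive.

```javascript
// Escape the five characters that are special in XML text content.
// '&' must be replaced first so the other entities aren't double-escaped.
function escapeXml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Format a value for an xsd:dateTime element. Date objects become ISO 8601
// in UTC (assumption); strings are assumed to be pre-formatted already.
function toXsdDateTime(value) {
  return value instanceof Date ? value.toISOString() : String(value);
}

// Hypothetical helper that fills in the SaveProduct request shown above.
function buildSaveProductEnvelope(productID, productName, manufactureDate) {
  return '<soap:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" ' +
    'xmlns:xsd="http://www.w3.org/2001/XMLSchema" ' +
    'xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">' +
    '<soap:Body>' +
    '<SaveProduct xmlns="http://sh.inobido.com/">' +
    '<productID>' + escapeXml(productID) + '</productID>' +
    '<productName>' + escapeXml(productName) + '</productName>' +
    '<manufactureDate>' + escapeXml(toXsdDateTime(manufactureDate)) + '</manufactureDate>' +
    '</SaveProduct>' +
    '</soap:Body>' +
    '</soap:Envelope>';
}
```

Inside beginSaveProduct you could then write `var soapMessage = buildSaveProductEnvelope(productID, productName, manufactureDate);` and leave the $.ajax call unchanged.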

To make an Ajax request with jQuery you use the ajax method of the jQuery object. The $ (dollar sign) is simply an alias for jQuery, the object’s actual name, and provides a shortcut to it. The ajax method provides a wide range of options to manage low-level Ajax tasks, but we’ll only cover the ones used here:

- url: Should be pretty obvious. This is the web service’s end-point URL. Instead of hard coding it, I assign the URL to the variable productServiceUrl when I generate the page’s HTML on the server side.

- type: The type of request we’re sending. jQuery uses “GET” by default. If you look again at the SaveProduct operation’s definition, you will notice that its 1st line specifies that requests should use the HTTP “POST” method.

- dataType: The type of data you expect the response to send back. The usual types are available, such as html, json, etc. If you’re working with a SOAP web service, you need to specify the xml dataType.

- data: Another easy one. This is the actual data, as XML text, that you will be sending to the web service.

- complete: The callback function that will be executed when the request completes. The callback must have the following signature: function (XMLHttpRequest, textStatus) { /* Code goes here */ }.

- contentType: A string representing the MIME content type of the request. In this case it’s “text/xml”, because we’re working with a SOAP web service that expects XML data.
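The sample request at the top of this post also carries a SOAPAction header, which none of the options above sets. Below is a sketch that gathers the same options into one settings object and adds that header through jQuery’s beforeSend callback, which receives the XMLHttpRequest before it is sent. The helper name buildSoapAjaxSettings is hypothetical, and whether your service actually requires the header is an assumption worth checking (ASP.NET .asmx services route SOAP 1.1 requests on it by default).

```javascript
// Hypothetical helper that assembles the settings passed to $.ajax.
// beforeSend is used to add the header because it works across jQuery versions.
function buildSoapAjaxSettings(url, soapAction, soapMessage, onComplete) {
  return {
    url: url,
    type: 'POST',
    dataType: 'xml',
    data: soapMessage,
    contentType: 'text/xml; charset="utf-8"',
    complete: onComplete,
    beforeSend: function (xhr) {
      // The SOAPAction value is sent quoted, as in the sample request
      xhr.setRequestHeader('SOAPAction', '"' + soapAction + '"');
    }
  };
}
```

In the page this would be used as `$.ajax(buildSoapAjaxSettings(productServiceUrl, 'http://sh.inobido.com/SaveProduct', soapMessage, endSaveProduct));`.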

Now you’re ready to send your XML data off to the web service. Once the server finishes processing the request, the endSaveProduct function gets called. To process the XML response in jQuery, you need to know the SOAP response’s schema/definition. The SaveProduct web method returns a response in the following format:

```
HTTP/1.1 200 OK
Content-Type: text/xml; charset=utf-8
Content-Length: length

<?xml version="1.0" encoding="utf-8"?>
<soap:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <SaveProductResponse xmlns="http://sh.inobido.com/">
      <SaveProductResult>
        <ID>int</ID>
        <Name>string</Name>
        <ManufactureDate>dateTime</ManufactureDate>
      </SaveProductResult>
    </SaveProductResponse>
  </soap:Body>
</soap:Envelope>
```

From this schema it should be clear that the web method SaveProduct sends back the Product object that was saved. You will find the XML document/data on the xmlHttpRequest parameter’s responseXML property. From here you can use the usual jQuery methods to traverse the XML document’s nodes to extract the data.
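Every value read from responseXML arrives as text, so the saved product’s fields usually need converting back into a number and a date. Below is a sketch that keeps that conversion separate from jQuery by taking a getText callback. The names toProduct and getText are hypothetical, and relying on JavaScript’s Date parser for the dateTime value is an assumption: a value without a time-zone suffix is interpreted as local time.

```javascript
// Hypothetical helper: turn the text of the SaveProductResult child
// elements into a typed object. getText(name) returns an element's text.
function toProduct(getText) {
  return {
    id: parseInt(getText('ID'), 10),          // NaN if ID is missing or malformed
    name: getText('Name'),
    manufactureDate: new Date(getText('ManufactureDate')) // Invalid Date if malformed
  };
}
```

Inside the each() callback of endSaveProduct it could be called as `var result = $(this); var product = toProduct(function (name) { return result.find(name).text(); });`. Capturing `$(this)` first matters, because `this` inside the inner function no longer refers to the SaveProductResult element.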

The Joy, Blood, Sweat And Tears Of InfoPath 2007

Posted: June 13, 2009 Filed under: InfoTech, Office | Tags: C#, InfoPath, Microsoft Office, Mono.NET, MOSS 2007, SharePoint, SQL Server, SQL Server Native XML Web Services, SQL Web Services, Visual SourceSafe, XPath | 14 Comments

I recently completed a project based on InfoPath 2007 (the Office client version) and Microsoft Office SharePoint Server 2007 (MOSS 2007). Looking back, I can say that InfoPath has its uses, but before you build a solution around it you have to be very sure about its limitations. InfoPath has a number of limitations, especially with regard to submitting data, that aren’t apparent at first sight. If you don’t watch out, you can quickly get caught up in what feels like a never-ending maze of dead ends.

InfoPath is often pitched as a solution that doesn’t require writing custom code. This project was no different, and its timelines were planned accordingly. In the end we had to write a fair amount of custom code, which was fun, but took more time.

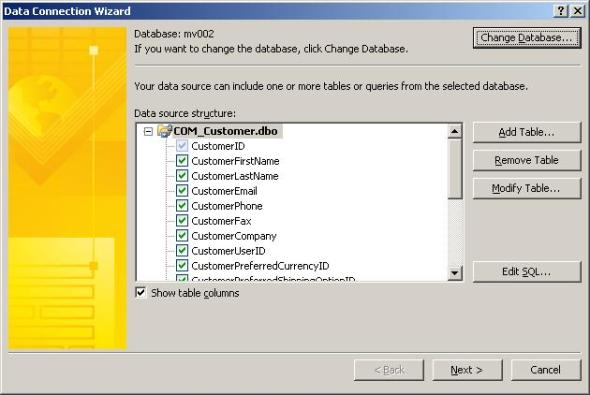

The Many Limitations Of The InfoPath Database Data Source

InfoPath generally works well for viewing standard enterprise data sources such as database tables or SharePoint lists. The limitations become apparent when you try to submit to a database using an InfoPath SQL connection, or to perform advanced queries. These are the main limitations you’ll run into with an InfoPath SQL database data source/connection:

- Only submit to a single table. You cannot submit to views, or to SQL data sources that use joins.

- To submit to a database you can only use the main data connection. In other words, you can’t use a database view as the main data source and set up another simple single-table select to submit to.

- Range queries are not possible. You can only use a field once in a query’s WHERE clause, and only with an equality operator.

- SQL data sources depend on the table schema. If a SQL data source’s underlying table is modified, even by just adding a column (so InfoPath’s SELECT statement doesn’t actually change), the data source will break.

With SharePoint list data sources you cannot filter the data with query fields, the way you can with relational data sources.

The above limitations, especially those regarding relational data sources, mean one thing: web services are mandatory for working with your relational data. Using web services lets you overcome all the limitations of the standard InfoPath SQL data source, and work with a consistent schema.

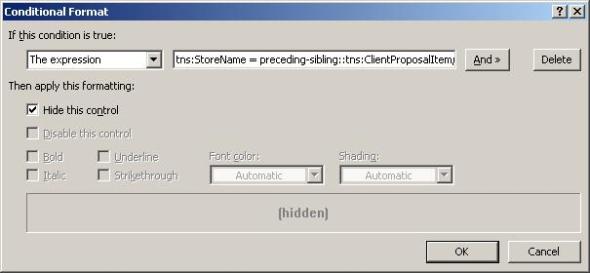

Another thing to watch out for is that InfoPath’s performance deteriorates quickly when you have more than 50 rows in your result set; sometimes the threshold is much lower. In the project I worked on, the data came back lightning fast from the database through the web service. But as soon as the data hit the form and InfoPath started parsing the XML document, the form froze completely for quite a while. I decided not to torture myself trying to page my form data, so I haven’t looked into that yet (and I believe InfoPath is not meant to be used in this manner). The quickest and most effective solution I could come up with was to let users load data into their form incrementally. You retrieve your data from the data source as usual, but instead of clearing the form, you add the new result set to the data already in the form. The big drawback is that you need custom code that modifies the XML document directly with an XmlWriter: not a particularly pleasant exercise.

```csharp
// Requires: using System.Xml; using System.Xml.XPath; using Microsoft.Office.InfoPath;
// (normally already present in the form's code file)
public void Load_Clicked(object sender, ClickedEventArgs e)
{
    const string ns = "http://sh.inobido.com/CRM/Service";

    // Call the web service of the secondary DataSource, which will populate it
    DataSources["ClientWS2"].QueryConnection.Execute();

    var clients = DataSources["ClientWS2"].CreateNavigator().Select("/dfs:myFields/dfs:dataFields/tns:GetClientsResponse/tns:GetClientsResult/tns:Client", NamespaceManager);

    // The 1st time rows are added GetClientsResult might not exist, only GetClientsResponse
    var main = MainDataSource.CreateNavigator().SelectSingleNode("/dfs:myFields/dfs:dataFields/tns:GetClientsResponse/tns:GetClientsResult", NamespaceManager);
    if (main == null) main = MainDataSource.CreateNavigator().SelectSingleNode("/dfs:myFields/dfs:dataFields/tns:GetClientsResponse", NamespaceManager);

    // Client elements must go under .../tns:GetClientsResult, not directly under .../tns:GetClientsResponse
    bool createResultElement = main.LocalName == "GetClientsResponse";

    // Disposing the writer at the end of the using block commits the changes
    using (XmlWriter writer = main.AppendChild())
    {
        if (createResultElement)
        {
            // So if it doesn't exist, create it first
            writer.WriteStartElement("GetClientsResult", ns);
        }

        while (clients.MoveNext())
        {
            writer.WriteStartElement("Client", ns);

            // Select all the client element's child elements
            var fields = clients.Current.Select("*", NamespaceManager);
            while (fields.MoveNext())
            {
                // Write each element and value to the Main DataSource
                // (LocalName, since the namespace is supplied separately)
                writer.WriteStartElement(fields.Current.LocalName, ns);
                writer.WriteString(fields.Current.Value);
                writer.WriteEndElement();
            }

            writer.WriteEndElement();
        }

        if (createResultElement)
        {
            // Close the GetClientsResult element opened above
            writer.WriteEndElement();
        }
    }
}
```

The above event fires when a user clicks the Load button. The trick to load data incrementally is that you need a second DataSource exactly the same as the Main DataSource (they should point to the same data store). Whenever you call DataSource.QueryConnection.Execute() InfoPath will wipe any previous data from that DataSource, and reload it with the new data. That’s why you need a separate second DataSource that you call Execute on, and then copy that data to the Main DataSource. The end result is the Main DataSource doesn’t lose its data, but data gets added to it on each query.
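Stripped of the InfoPath and XPathNavigator plumbing, the incremental load is an "append, never replace" merge. The JavaScript sketch below models the two data sources as plain objects and arrays purely to illustrate that logic; the object shape and the appendClients name are assumptions, and in the actual form the copying is done with an XmlWriter as in the C# code above.

```javascript
// Hypothetical, DOM-free model of the Load_Clicked logic: the main data
// source keeps its existing rows, and each query's rows are appended as copies.
function appendClients(mainResponse, queriedClients) {
  // The first time rows are added, GetClientsResult may not exist yet
  if (!mainResponse.GetClientsResult) {
    mainResponse.GetClientsResult = [];
  }
  for (const client of queriedClients) {
    // Copy every child field so the main data source owns its own rows,
    // independent of the secondary source that the next query will wipe
    mainResponse.GetClientsResult.push({ ...client });
  }
  return mainResponse;
}
```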

Just another side note on InfoPath: pivot tables are not possible, because you have to know exactly which columns you’re binding to at design time, and you cannot create columns dynamically at runtime. This shouldn’t be a show stopper for most projects, but it’s worth mentioning: all the InfoPath forms we had to build came from Excel spreadsheets, and one of those spreadsheets was a monster pivot table.

Hacking The DataConnection

It’s possible to query a data connection directly from your InfoPath form’s code, change its SQL command dynamically, or extract its connection string. The biggest drawback of this hack (apart from being a hack, i.e. not recommended) is that it requires FullTrust and SQL Code Access Security (CAS) permissions. That means you have to sign your InfoPath form with a digital certificate, or create an installer so users can install it locally on their machines. This doesn’t work well when the form is made available to users through a SharePoint document library.